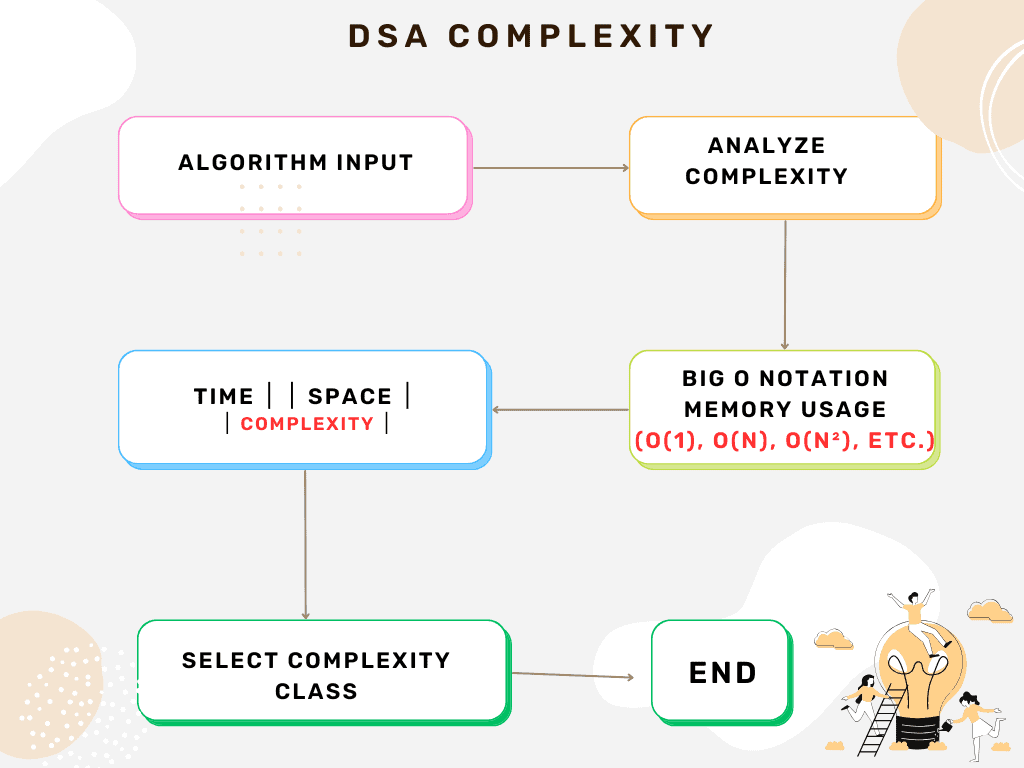

Introduction to Complexity in DSA

When designing and analyzing algorithms, understanding complexity is crucial. Complexity determines the efficiency of an algorithm in terms of time (time complexity) and space (space complexity). Python, being a widely used programming language, provides an excellent platform for implementing and analyzing these complexities.

Types of Complexity

- Time Complexity: Measures the amount of time an algorithm takes to execute as a function of input size (n).

- Space Complexity: Measures the amount of memory required by an algorithm to run as a function of input size.

Time Complexity Analysis

Time complexity is usually expressed using Big-O Notation, which describes the upper bound of an algorithm’s running time.

Common Time Complexities:

- O(1) – Constant Time: The execution time remains the same regardless of input size.

def constant_function(arr):

return arr[0] # Always takes the same time

2. O(log n) – Logarithmic Time: The execution time increases logarithmically.

import math

def log_function(n):

while n > 1:

n = n // 2 # Reduces problem size by half

3. O(n) – Linear Time: The execution time grows linearly with the input size.

def linear_function(arr):

for item in arr:

print(item)

4. O(n log n) – Linearithmic Time: Common in sorting algorithms like Merge Sort.

def merge_sort(arr):

if len(arr) > 1:

mid = len(arr) // 2

left_half = arr[:mid]

right_half = arr[mid:]

merge_sort(left_half)

merge_sort(right_half)

# Merging process (omitted for brevity)5. O(n^2) – Quadratic Time: Nested loops cause execution time to grow quadratically.

def quadratic_function(arr):

for i in arr:

for j in arr:

print(i, j)

6. O(2^n) – Exponential Time: Typically occurs in recursive problems like Fibonacci sequence.

def fibonacci(n):

if n <= 1:

return n

return fibonacci(n-1) + fibonacci(n-2)

7. O(n!) – Factorial Time: Found in brute-force problems like the Traveling Salesman Problem.

Space Complexity Analysis

Space complexity focuses on the additional memory used by an algorithm apart from the input data. It includes:

- Auxiliary space: Extra space needed for computations.

- Recursive stack space: Space taken by function call stacks.

Example of Space Complexity:

- O(1) – Constant Space:

def constant_space(n):

result = 0 # Uses a fixed amount of space

return result2. O(n) – Linear Space:

def linear_space(n):

arr = [0] * n # Space grows linearly with input size3. O(n) – Recursive Stack Space:

def recursive_function(n):

if n == 0:

return

recursive_function(n-1) # Recursion uses stack space

Conclusion

Understanding complexity in DSA helps in choosing efficient algorithms. While Python simplifies implementation, optimizing time and space complexity is essential for scalable applications. By analyzing Big-O notation, one can ensure the best performance when solving computational problems.